This article uses Google Colaboratory to create the TensorFlow Lite model "Magic Wand" for the M5Stack Core2.

Google Colaboratory environment setup

Follow the section "Google Colaboratory environment setup" in "Object detection with YOLOv4-tiny using Google Colaboratory" to set up a Google Colaboratory environment in which a GPU is available.

However, name the folder you create "M5StackCore2". Its path is "/content/drive/My\ Drive/M5StackCore2/". Save the notebook you create as "train_magic_wand_model.ipynb".
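The backslash in "My\ Drive" is shell escaping for the space in the folder name. As a minimal sketch (assuming the usual Colab mount point "/content/drive/My Drive", which the path above implies), the same path can be built in Python without escaping and quoted automatically when it has to be passed to a shell:

```python
import shlex
from pathlib import PurePosixPath

# Assumed Drive mount point; the folder name comes from the article.
DRIVE_ROOT = PurePosixPath("/content/drive/My Drive")
project_dir = DRIVE_ROOT / "M5StackCore2"

# Inside Python the space needs no escaping.
print(project_dir)

# For a shell command, let shlex add the quoting instead of writing "My\ Drive" by hand.
shell_cmd = f"cd {shlex.quote(str(project_dir))}"
print(shell_cmd)
```

Single-quoting the whole path and escaping the space with a backslash are equivalent for the shell; either form works in a `%%bash` cell.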

Creating the TensorFlow Lite model "Magic Wand"

The TensorFlow Lite model "Magic Wand" is created by following "Training in Colab" in "Gesture Recognition Magic Wand Training Scripts".

- Configure dependencies

- Prepare the data

- Load TensorBoard

- Begin training

- Create a C source file

The following command clones the TensorFlow repository (tflite-micro) into the "M5StackCore2" folder and copies the repository's "train" folder to "train" under "M5StackCore2".

    %%bash
    cd /content/drive/My\ Drive/M5StackCore2
    git clone --depth 1 -q https://github.com/tensorflow/tflite-micro
    cp -r tflite-micro/tensorflow/lite/micro/examples/magic_wand/train train

The "M5StackCore2" folder then has the following structure.
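To inspect the folder layout directly from a notebook cell, a small helper along these lines could be used. This is only a sketch: `list_tree` and its `max_depth` parameter are hypothetical names introduced here, not part of the training scripts.

```python
from pathlib import Path


def list_tree(root, max_depth=2):
    """Return indented lines describing the folders and files under root."""
    root = Path(root)
    lines = [root.name + "/"]

    def walk(folder, depth):
        if depth > max_depth:
            return
        # Folders first, then files, each group sorted by name.
        entries = sorted(folder.iterdir(), key=lambda p: (p.is_file(), p.name))
        for entry in entries:
            suffix = "/" if entry.is_dir() else ""
            lines.append("  " * depth + entry.name + suffix)
            if entry.is_dir():
                walk(entry, depth + 1)

    walk(root, 1)
    return lines
```

For example, `print("\n".join(list_tree("/content/drive/My Drive/M5StackCore2")))` would list the cloned repository and the copied "train" folder down to two levels.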

The following command downloads the data and extracts it into the "train" folder under "M5StackCore2".

    %%bash
    cd /content/drive/My\ Drive/M5StackCore2
    wget http://download.tensorflow.org/models/tflite/magic_wand/data.tar.gz
    tar xvzf data.tar.gz -C train 1>/dev/null

Messages like the following are output.

--2022-07-29 06:59:25-- http://download.tensorflow.org/models/tflite/magic_wand/data.tar.gz

Resolving download.tensorflow.org (download.tensorflow.org)... 142.251.12.128, 2404:6800:4003:c11::80

Connecting to download.tensorflow.org (download.tensorflow.org)|142.251.12.128|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 451862 (441K) [application/x-gzip]

Saving to: ‘data.tar.gz’

0K .......... .......... .......... .......... .......... 11% 32.9M 0s

50K .......... .......... .......... .......... .......... 22% 76.1M 0s

100K .......... .......... .......... .......... .......... 33% 61.8M 0s

150K .......... .......... .......... .......... .......... 45% 60.4M 0s

200K .......... .......... .......... .......... .......... 56% 70.9M 0s

250K .......... .......... .......... .......... .......... 67% 110M 0s

300K .......... .......... .......... .......... .......... 79% 106M 0s

350K .......... .......... .......... .......... .......... 90% 107M 0s

400K .......... .......... .......... .......... . 100% 86.6M=0.006s

2022-07-29 06:59:25 (69.0 MB/s) - ‘data.tar.gz’ saved [451862/451862]

tar: Ignoring unknown extended header keyword 'SCHILY.dev'

tar: Ignoring unknown extended header keyword 'SCHILY.ino'

tar: Ignoring unknown extended header keyword 'SCHILY.nlink'

tar: Ignoring unknown extended header keyword 'LIBARCHIVE.creationtime'

tar: Ignoring unknown extended header keyword 'SCHILY.dev'

tar: Ignoring unknown extended header keyword 'SCHILY.ino'

tar: Ignoring unknown extended header keyword 'SCHILY.nlink'

tar: Ignoring unknown extended header keyword 'SCHILY.dev'
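The same download-and-extract step can also be sketched in pure Python, which avoids the `tar` warnings about unknown extended header keywords shown above. The URL is the one from the command; the function names `download` and `extract_archive` are hypothetical helpers introduced for this example.

```python
import tarfile
import urllib.request
from pathlib import Path

DATA_URL = "http://download.tensorflow.org/models/tflite/magic_wand/data.tar.gz"


def download(url, target):
    """Download url to the local file target and return its path."""
    urllib.request.urlretrieve(url, target)
    return Path(target)


def extract_archive(archive_path, dest_dir):
    """Extract a .tar.gz archive into dest_dir and return the member names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        names = archive.getnames()
        archive.extractall(dest)
    return names
```

In the notebook this might be called as `extract_archive(download(DATA_URL, "data.tar.gz"), "train")` from inside the "M5StackCore2" folder.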

The "train" folder under "M5StackCore2" then has the following structure.

The following command runs the scripts that split the data into training, validation, and test sets and write them to the "train" folder under "M5StackCore2".

    %%bash
    cd /content/drive/My\ Drive/M5StackCore2
    cd train
    python data_prepare.py
    python data_split_person.py

Messages like the following are output.

data_length: 981

data_length:981

train_length:653

valid_length:136

test_length:192

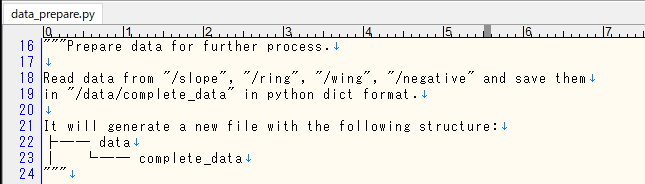

"data_prepare.py" performs the following processing and creates folders.

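"data_split.py" (the command above runs its per-person variant, "data_split_person.py") performs the following processing and creates folders.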

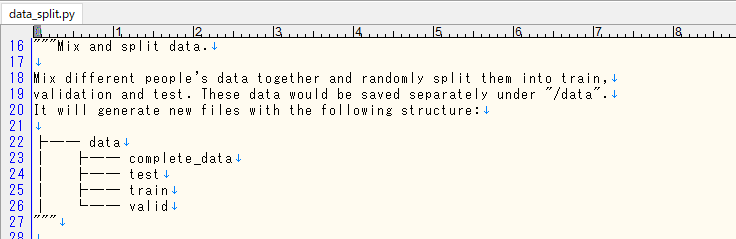
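The idea behind a per-person split can be sketched as follows. This is an illustration under stated assumptions, not the code of "data_split_person.py": the record layout (a dict with a "name" key for the performer) and the person names are hypothetical.

```python
def split_by_person(records, train_people, valid_people, test_people):
    """Assign each record to a split according to who performed the gesture."""
    train, valid, test = [], [], []
    for record in records:
        person = record["name"]  # assumed key holding the performer's name
        if person in train_people:
            train.append(record)
        elif person in valid_people:
            valid.append(record)
        elif person in test_people:
            test.append(record)
    return train, valid, test


# Hypothetical records: three people, two gestures each.
records = [
    {"name": "alice", "gesture": "wing"},
    {"name": "alice", "gesture": "ring"},
    {"name": "bob", "gesture": "wing"},
    {"name": "bob", "gesture": "slope"},
    {"name": "carol", "gesture": "ring"},
    {"name": "carol", "gesture": "slope"},
]
train, valid, test = split_by_person(records, {"alice"}, {"bob"}, {"carol"})
print(len(train), len(valid), len(test))
```

Splitting by person means the validation and test gestures come from people the model never saw during training. In the log above, the three set lengths add up to the full data length: 653 + 136 + 192 = 981.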

The following command starts TensorBoard, which displays accuracy and loss during training as graphs.

    # Load TensorBoard
    %load_ext tensorboard
    %tensorboard --logdir logs/scalars

Messages like the following are output.

The tensorboard extension is already loaded. To reload it, use:

%reload_ext tensorboard

Reusing TensorBoard on port 6006 (pid 266), started 0:00:36 ago. (Use '!kill 266' to kill it.)

The TensorBoard graphs that are displayed are shown below. Because they are served on localhost, "xampp" is started beforehand.

The following command runs the training. It takes about 5 minutes on a GPU runtime.

    %%bash
    cd /content/drive/My\ Drive/M5StackCore2
    cd train
    python train.py --model CNN --person true

Messages like the following are output.

Start to load data...

train_data_length:16325

valid_data_length:136

test_data_length:192

Start to build net...

Built CNN.

Start training...

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 128, 3, 8) 104

max_pooling2d (MaxPooling2D (None, 42, 1, 8) 0

)

dropout (Dropout) (None, 42, 1, 8) 0

conv2d_1 (Conv2D) (None, 42, 1, 16) 528

max_pooling2d_1 (MaxPooling (None, 14, 1, 16) 0

2D)

dropout_1 (Dropout) (None, 14, 1, 16) 0

flatten (Flatten) (None, 224) 0

dense (Dense) (None, 16) 3600

dropout_2 (Dropout) (None, 16) 0

dense_1 (Dense) (None, 4) 68

=================================================================

Total params: 4,300

Trainable params: 4,300

Non-trainable params: 0

_________________________________________________________________

None

Model size: 16.796875 KB

Epoch 1/50

1000/1000 [==============================] - 7s 4ms/step - loss: 8.9230 - accuracy: 0.3801 - val_loss: 0.8341 - val_accuracy: 0.5662

Epoch 2/50

1000/1000 [==============================] - 4s 4ms/step - loss: 1.1010 - accuracy: 0.4501 - val_loss: 0.8819 - val_accuracy: 0.5993

Epoch 3/50

1000/1000 [==============================] - 6s 6ms/step - loss: 1.0321 - accuracy: 0.4951 - val_loss: 0.8858 - val_accuracy: 0.6103

Epoch 4/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.9861 - accuracy: 0.5147 - val_loss: 1.0422 - val_accuracy: 0.5662

Epoch 5/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.9480 - accuracy: 0.5360 - val_loss: 0.8421 - val_accuracy: 0.6801

Epoch 6/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.8979 - accuracy: 0.5636 - val_loss: 0.8140 - val_accuracy: 0.6875

Epoch 7/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.8745 - accuracy: 0.5818 - val_loss: 0.7369 - val_accuracy: 0.7169

Epoch 8/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.8375 - accuracy: 0.5942 - val_loss: 0.6896 - val_accuracy: 0.7243

Epoch 9/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.7905 - accuracy: 0.6272 - val_loss: 0.4391 - val_accuracy: 0.7868

Epoch 10/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.6900 - accuracy: 0.6856 - val_loss: 0.5140 - val_accuracy: 0.7757

Epoch 11/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.6323 - accuracy: 0.7058 - val_loss: 0.4334 - val_accuracy: 0.8051

Epoch 12/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.5849 - accuracy: 0.7181 - val_loss: 0.6492 - val_accuracy: 0.6912

Epoch 13/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.5547 - accuracy: 0.7294 - val_loss: 0.5100 - val_accuracy: 0.7721

Epoch 14/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.5416 - accuracy: 0.7276 - val_loss: 0.3343 - val_accuracy: 0.8162

Epoch 15/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.5203 - accuracy: 0.7303 - val_loss: 0.3086 - val_accuracy: 0.8456

Epoch 16/50

1000/1000 [==============================] - 4s 4ms/step - loss: 0.4582 - accuracy: 0.7937 -

・・・

6/6 [==============================] - 0s 3ms/step - loss: 0.1415 - accuracy: 0.9531

tf.Tensor(

[[ 74 8 0 0]

[ 0 82 0 2]

[ 1 0 86 1]

[ 0 4 2 124]], shape=(4, 4), dtype=int32)

Loss 0.14154477417469025, Accuracy 0.953125

Basic model is 20224 bytes

Quantized model is 9696 bytes

Difference is 10528 bytes

Training finished!

2022-07-29 21:13:23.554932: W tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:39] Overriding allow_growth setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. Original config value was 0.

2022-07-29 21:16:48.768064: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

2022-07-29 21:16:49.846156: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:357] Ignored output_format.

2022-07-29 21:16:49.846207: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:360] Ignored drop_control_dependency.

WARNING:absl:Buffer deduplication procedure will be skipped when flatbuffer library is not properly loaded

2022-07-29 21:16:51.409920: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:357] Ignored output_format.

2022-07-29 21:16:51.409969: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:360] Ignored drop_control_dependency.

WARNING:absl:Buffer deduplication procedure will be skipped when flatbuffer library is not properly loaded
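Several numbers in the training log above can be cross-checked with simple arithmetic. The sketch below recomputes the per-layer parameter counts, the reported model size, the test accuracy from the confusion matrix, and the size saved by quantization. The kernel sizes (4x3 and 4x1) and the single input channel are assumptions that do not appear in the log; they are chosen because they reproduce the parameter counts in the summary.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(inputs, units):
    """Weights plus one bias per unit."""
    return (inputs + 1) * units


# Kernel sizes and the input channel count are assumptions (see lead-in).
layer_params = {
    "conv2d": conv2d_params(4, 3, 1, 8),
    "conv2d_1": conv2d_params(4, 1, 8, 16),
    "dense": dense_params(224, 16),  # Flatten output: 14 * 1 * 16 = 224
    "dense_1": dense_params(16, 4),
}
total_params = sum(layer_params.values())

# 4 bytes per float32 parameter, expressed in KB of 1024 bytes.
model_size_kb = total_params * 4 / 1024

# Confusion matrix copied from the log; correct predictions lie on the diagonal.
confusion = [
    [74, 8, 0, 0],
    [0, 82, 0, 2],
    [1, 0, 86, 1],
    [0, 4, 2, 124],
]
correct = sum(confusion[i][i] for i in range(len(confusion)))
total = sum(sum(row) for row in confusion)
accuracy = correct / total

# Bytes saved by quantization, and the ratio of training samples
# (train_data_length in this log / train_length from the split step).
size_difference = 20224 - 9696
train_growth = 16325 / 653

print(layer_params, total_params, model_size_kb)
print(accuracy, size_difference, train_growth)
```

The results match the log: 4,300 parameters, 16.796875 KB, accuracy 0.953125, and a difference of 10528 bytes. The training set is exactly 25 times the 653 samples produced by the split, which would be consistent with data augmentation being applied to the training set only; that is an inference from the numbers, not something the log states.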

The following command converts the model "model.tflite" created by "train.py" into the C source file "model.cc".

    %%bash
    cd /content/drive/My\ Drive/M5StackCore2
    cd train
    apt-get -qq install xxd
    xxd -i model.tflite > model.cc
    cat model.cc

Messages like the following are output. The log shown here ends with a "cat" error because it was recorded with a path at which "model.cc" did not exist; with the relative path "model.cc" used in the command above, the contents of the file are printed instead.

Selecting previously unselected package xxd.

(Reading database ... 155673 files and directories currently installed.)

Preparing to unpack .../xxd_2%3a8.0.1453-1ubuntu1.8_amd64.deb ...

Unpacking xxd (2:8.0.1453-1ubuntu1.8) ...

Setting up xxd (2:8.0.1453-1ubuntu1.8) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

cat: /content/model.cc: No such file or directory

The C source file "model.cc" is created as follows.
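For reference, `xxd -i` writes the file's bytes as an `unsigned char` array plus a length variable, with names derived from the input file name (here "model_tflite"). The following is a minimal Python sketch that produces the same general layout; `to_c_array` is a hypothetical helper, and the exact whitespace of real `xxd` output may differ.

```python
def to_c_array(data, name, per_line=12):
    """Render bytes as a C array definition in the style of `xxd -i`."""
    rows = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk))
    body = ",\n".join(rows)
    return (
        f"unsigned char {name}[] = {{\n"
        f"{body}\n"
        f"}};\n"
        f"unsigned int {name}_len = {len(data)};\n"
    )


# Four sample bytes stand in for the real model file.
print(to_c_array(b"\x1c\x00\x00\x00", "model_tflite"))
```

To convert the real model, the bytes could be read with `Path("model.tflite").read_bytes()` and the result written to "model.cc".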